The Medical Futurist · Exploratory · 3 min read

Key Takeaway:

AI algorithms are transforming healthcare by improving diagnostics and patient care, with significant advancements expected in disease prediction over the next few years.

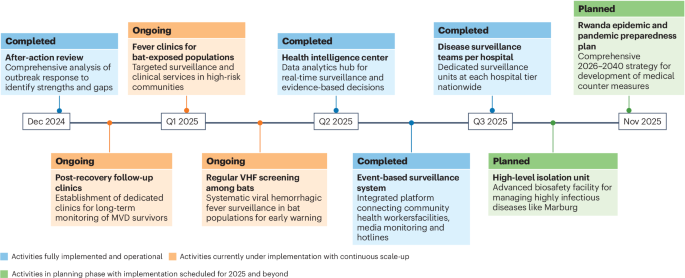

The study, "Top Smart Algorithms In Healthcare," conducted by The Medical Futurist, examines how artificial intelligence (AI) algorithms are being integrated into healthcare and how they affect diagnostics, patient care, and disease prediction. The work matters because it shows where AI could address persistent problems in healthcare, such as improving diagnostic accuracy, optimizing treatment plans, and forecasting disease outbreaks, and so support more efficient and effective care delivery.

The methodology employed in this analysis involved a comprehensive review of the current AI algorithms utilized in healthcare, focusing on their application areas, performance metrics, and clinical outcomes. The study synthesized data from various sources, including peer-reviewed articles, clinical trial results, and expert interviews, to compile a list of leading algorithms that demonstrate significant promise in clinical settings.

Key findings from the study indicate that AI algorithms have advanced substantially in several domains. For instance, algorithms developed for imaging diagnostics, such as those for detecting diabetic retinopathy and skin cancer, have achieved accuracy rates exceeding 90%, comparable to or surpassing human experts. Additionally, predictive models for patient outcomes and disease progression, such as those used in sepsis prediction, have demonstrated improved sensitivity and specificity, with some models reducing false positive rates by up to 30%.
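To make the metrics in the paragraph above concrete, here is a minimal Python sketch of how sensitivity, specificity, and false positive rate are computed from confusion-matrix counts. The counts are hypothetical and chosen only for illustration; they do not come from the study. The summary also does not say whether the "up to 30%" figure is a relative or an absolute reduction, so the sketch assumes a relative one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Counts from comparing model alerts against confirmed outcomes."""
    tp: int  # true positives: condition present, model flagged it
    fp: int  # false positives: condition absent, model flagged it
    tn: int  # true negatives: condition absent, model did not flag
    fn: int  # false negatives: condition present, model missed it


def sensitivity(c: ConfusionCounts) -> float:
    """Share of actual positive cases the model catches."""
    return c.tp / (c.tp + c.fn)


def specificity(c: ConfusionCounts) -> float:
    """Share of actual negative cases the model correctly leaves alone."""
    return c.tn / (c.tn + c.fp)


def false_positive_rate(c: ConfusionCounts) -> float:
    """Share of actual negative cases the model wrongly flags (1 - specificity)."""
    return c.fp / (c.fp + c.tn)


def relative_reduction(before: float, after: float) -> float:
    """Fractional drop from `before` to `after` (0.30 means 30% lower)."""
    return (before - after) / before


# Hypothetical counts for illustration only; not taken from the study.
baseline = ConfusionCounts(tp=80, fp=100, tn=900, fn=20)
improved = ConfusionCounts(tp=80, fp=70, tn=930, fn=20)

fpr_before = false_positive_rate(baseline)
fpr_after = false_positive_rate(improved)

print(f"sensitivity: {sensitivity(improved):.2f}")
print(f"specificity: {specificity(baseline):.2f} -> {specificity(improved):.2f}")
print(f"false positive rate: {fpr_before:.2f} -> {fpr_after:.2f}")
print(f"relative reduction: {relative_reduction(fpr_before, fpr_after):.0%}")
```

With these illustrative counts, the false positive rate falls from 0.10 to 0.07, which is a 30% relative reduction but only a 3 percentage point absolute change. Sensitivity stays at 0.80 because the missed cases are unchanged, which is why a false positive figure is best read alongside sensitivity.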

The innovative aspect of this research lies in its comprehensive approach to cataloging and evaluating AI algorithms, providing a clear overview of the current landscape and identifying key areas for future development. However, the study acknowledges limitations, including the variability in algorithm performance across different populations and the need for extensive validation in diverse clinical settings. Furthermore, the ethical considerations surrounding data privacy and algorithmic bias remain significant challenges that require ongoing attention.

Future directions for this research include the clinical validation and deployment of these AI algorithms in real-world healthcare environments. This will necessitate collaboration between technologists, clinicians, and regulatory bodies to ensure that AI tools are not only effective but also safe and equitable for all patient populations.

For Clinicians:

"Exploratory study, sample size not specified. Highlights AI's potential in diagnostics and care. Lacks clinical validation and real-world application data. Cautious optimism warranted; further trials needed before integration into practice."

For Everyone Else:

"Exciting AI research in healthcare, but it's still early. It may take years before it's available. Keep following your doctor's advice and don't change your care based on this study alone."

Citation:

The Medical Futurist, 2025.